Time to load some textures. For this project, I really wanted to play around with the DirectXTex library for loading files, and particularly the DDS format. The format is apparently used quite extensively in industry and has some pretty interesting advantages, like containing mipmaps and whole texture arrays in one file. As we’ll see, this can really simplify loading textures and creating resources.

Converting to DDS

If you have looked at my previous project, a simple Terrain Rendering engine, you know I used separate textures stored as PNG files for that project. I had to load each texture separately and then combine them into a texture array at run time. For this project, I’m combining them together into a DDS file containing the whole texture array. As with that project, I’m not using mipmaps. I’m also using a simplified version with no bump mapping. Given we are effectively looking down on this terrain from a distance, I don’t think we’ll be missing anything leaving the bump mapping out.

Adding mipmaps would probably improve performance and reduce the visual artifacts (i.e., shimmering) you get from rendering textures that are too high resolution onto too small a surface. However, I’ve never worked with mipmaps before, and I don’t know at the moment whether I could automatically generate them from the existing textures I’m using. I’m also not sure about using them in the shaders. It could be that this is basically all automated and I could add it for free, but I need to research it first, so I haven’t added it yet.

To convert from PNG to DDS, I downloaded the DirectXTex library source and compiled all of the projects. The texassemble project is an extremely easy-to-use command-line tool that combines image files into one DDS file. You just need to specify whether you want a cube map, a volume map, or a texture array, and make sure the files are all the same size. I didn’t have to go any further, since I don’t care about compression and my images are already in a format supported by the simpler DirectXTK library we’ll be using in our project.

So what is DirectXTK? Where DirectXTex is a full image loading library plus standalone tools, DirectXTK (the DirectX Tool Kit) is a streamlined library designed to be simpler and lighter weight. It can’t handle as many formats, but it is really easy to use.

Since I’m using Visual Studio, it turns out to be quite simple to add to my project as well. I just had to install the package using NuGet. Once the package is installed, I include DDSTextureLoader.h in my Terrain.cpp file and I can load and create my texture array with one line.

task<void> loadTexturesTask = createRasterizerTask.then([this]() {

DX::ThrowIfFailed(CreateDDSTextureFromFile(m_deviceResources->GetD3DDevice(), L"Textures\\terrainDiffuse.dds",

m_texDiffuseMaps.GetAddressOf(), m_srvDiffuseMaps.GetAddressOf()));

});

Well, that’s more than one line; but only because I wanted the load to be asynchronous. As you would expect, the Resource and Shader Resource View are both declared in the header file.

Microsoft::WRL::ComPtr<ID3D11Resource> m_texDiffuseMaps;

Microsoft::WRL::ComPtr<ID3D11ShaderResourceView> m_srvDiffuseMaps;

I don’t think you actually need to keep the ID3D11Resource at all unless you plan to access it from the CPU. I’ve seen CreateDDSTextureFromFile() called with that argument set to nullptr, and it doesn’t seem to break anything. To give a shader access to the textures, you just need to bind the Shader Resource View in Render.

context->PSSetShaderResources(0, 1, m_hmSRV.GetAddressOf());

context->PSSetShaderResources(1, 1, m_srvDiffuseMaps.GetAddressOf());

Alternatively, you could (and probably should, to minimize API calls) combine these into an array of SRVs and bind them with a single call.

ID3D11ShaderResourceView *views[2] = { m_hmSRV.Get(), m_srvDiffuseMaps.Get() };

context->PSSetShaderResources(0, 2, views);

That’s about it for loading the textures. Remember to update ReleaseDeviceDependentResources() as well.

Texture Splatting

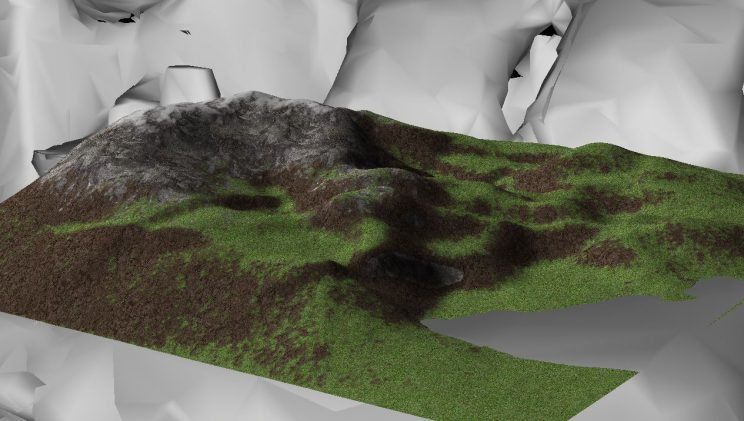

Now that we’ve bound the texture array to our Pixel Shader, we can update the Shader itself to use the new data. As I mentioned above, I’m using a slightly modified version of the texture splatting technique I used in my Rendering Terrain project.

To start, we’ll just test a single texture stretched across the terrain. We had already thought ahead and defined (u, v) coordinates for our terrain mesh, so we can use them to sample the texture. We need to supply a third value to define which index of the array to sample from.

I’m also including the declarations for the Texture array and SamplerState in the code snippet.

Texture2DArray<float4> diffuseMaps : register(t1);

SamplerState diffsampler : register(s1) {

Filter = MIN_MAG_MIP_LINEAR;

AddressU = Wrap;

AddressV = Wrap;

};

float3 color = diffuseMaps.Sample(diffsampler, float3(input.uv, 2)).rgb;

I need to specify the .rgb at the end because our textures contain a depth map in the alpha channel. We’ll use that later.

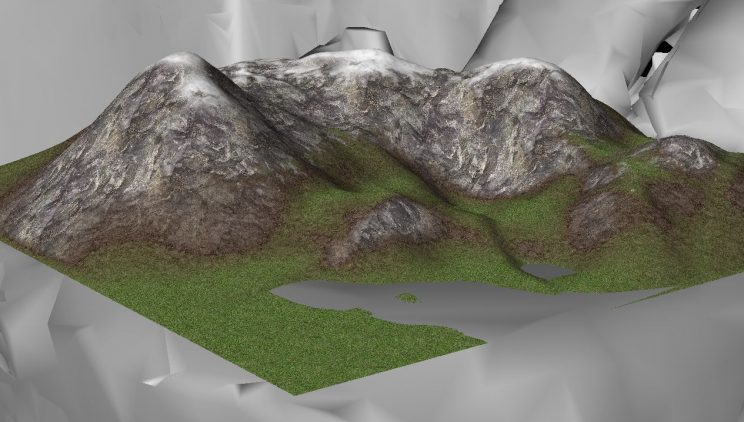

This looks like it’s functioning as expected.

Let’s try tiling this texture. We should be able to tile it by simply multiplying input.uv by however many times we want to tile the texture across the surface. Let’s try 2.

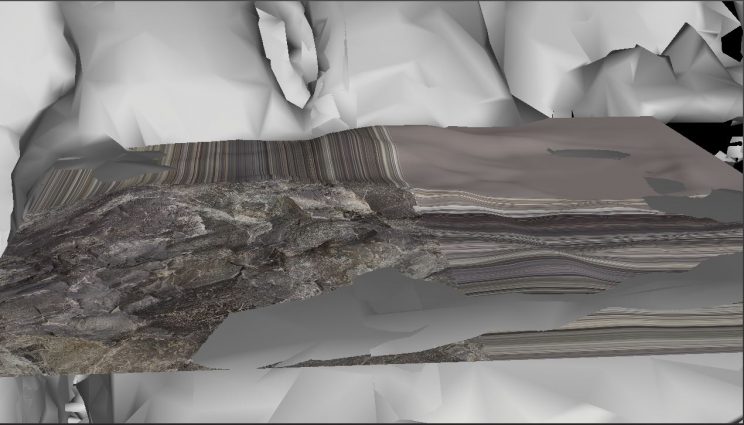

It appears that our texture sampling is not wrapping, even though we set the SamplerState’s U and V address modes to Wrap. So why didn’t it work? It turns out I was incorrectly using syntax from the deprecated Effects framework. With the removal of D3DX, we’re not supposed to define the SamplerState in our shaders. Instead, we should declare the samplers in the shaders, define them on the CPU, and bind them at run time.

// in PixelShader.hlsl

Texture2D<float> heightmap : register(t0);

Texture2DArray<float4> diffuseMaps : register(t1);

SamplerState hmsampler : register(s0);

SamplerState diffsampler : register(s1);

// in Terrain.cpp

// CreateDeviceDependentResources()

loadTexturesTask.then([this]() {

// height map sampler

CD3D11_SAMPLER_DESC descSampler = CD3D11_SAMPLER_DESC(CD3D11_DEFAULT());

auto dev = m_deviceResources->GetD3DDevice();

DX::ThrowIfFailed(dev->CreateSamplerState(&descSampler, m_samplerHeightMap.GetAddressOf()));

// texture sampler

descSampler.AddressU = D3D11_TEXTURE_ADDRESS_WRAP;

descSampler.AddressV = D3D11_TEXTURE_ADDRESS_WRAP;

DX::ThrowIfFailed(dev->CreateSamplerState(&descSampler, m_samplerTexture.GetAddressOf()));

m_loadingComplete = true;

});

// Render

// attach sample states

ID3D11SamplerState *samplers[2] = { m_samplerHeightMap.Get(), m_samplerTexture.Get() };

context->PSSetSamplers(0, 2, samplers);

By correcting this, we can now tile our textures properly.

From here, let’s actually work on getting Texture Splatting working. The first change we need to make is that since this method of Texture Splatting will blend by both slope and height, we actually need to know what the height of the terrain is at the pixel. Since we already look up the height in the Vertex Shader to correctly update the position variable, we can also save an additional height variable per vertex and that will get correctly interpolated for each pixel. Alternatively, we could sample the height map in the Pixel Shader, but the results will be essentially the same and another per-pixel sample is more expensive than the per-vertex sample.

I then copied over the code from the Render Terrain project. I won’t reproduce it here as I’ve changed it and will provide the latest version in a couple of paragraphs. It uses the technique presented here to blend textures by height, and then uses a simple linear interpolation to blend by slope.

After I got this working, I realized it had never occurred to me to try using the same Blend technique for the slope as for the height. I decided to try making that change here to see how it looks, and it turns out I really like it.

I’ll have to try this change in the other project and see how it looks in an FPS, but it looks great from an overhead view like this.

Here’s the entire Pixel Shader. It’s reasonably straightforward, or has been explained elsewhere, so I won’t say anything more about it here.

// Per-pixel color data passed through the pixel shader.

struct PixelShaderInput

{

min16float4 pos : SV_POSITION;

min16float2 uv : TEXCOORD0;

float height : TEXCOORD1;

};

Texture2D<float> heightmap : register(t0);

Texture2DArray<float4> diffuseMaps : register(t1);

SamplerState hmsampler : register(s0);

SamplerState diffsampler : register(s1);

float4 Blend(float4 tex1, float blend1, float4 tex2, float blend2) {

float depth = 0.2f;

float ma = max(tex1.a + blend1, tex2.a + blend2) - depth;

float b1 = max(tex1.a + blend1 - ma, 0);

float b2 = max(tex2.a + blend2 - ma, 0);

return (tex1 * b1 + tex2 * b2) / (b1 + b2);

}

float4 GetTexByHeightPlanar(float height, float2 uv, float low, float med, float high) {

float bounds = 0.05f;

float transition = 0.2f;

float lowBlendStart = transition - 2 * bounds;

float highBlendEnd = transition + 2 * bounds;

float4 c;

if (height < lowBlendStart) {

c = diffuseMaps.Sample(diffsampler, float3(uv, low));

}

else if (height < transition) {

float4 c1 = diffuseMaps.Sample(diffsampler, float3(uv, low));

float4 c2 = diffuseMaps.Sample(diffsampler, float3(uv, med));

float blend = (height - lowBlendStart) * (1.0f / (transition - lowBlendStart));

c = Blend(c1, 1 - blend, c2, blend);

}

else if (height < highBlendEnd) {

float4 c1 = diffuseMaps.Sample(diffsampler, float3(uv, med));

float4 c2 = diffuseMaps.Sample(diffsampler, float3(uv, high));

float blend = (height - transition) * (1.0f / (highBlendEnd - transition));

c = Blend(c1, 1 - blend, c2, blend);

}

else {

c = diffuseMaps.Sample(diffsampler, float3(uv, high));

}

return c;

}

float3 GetTexBySlope(float slope, float height, float2 uv) {

float4 c;

float blend;

if (slope < 0.6f) {

blend = slope / 0.6f;

float4 c1 = GetTexByHeightPlanar(height, uv, 0, 2, 3);

float4 c2 = GetTexByHeightPlanar(height, uv, 1, 2, 3);

c = Blend(c1, 1 - blend, c2, blend);

}

else if (slope < 0.7f) {

blend = (slope - 0.6f) * (1.0f / (0.7f - 0.6f));

float4 c1 = GetTexByHeightPlanar(height, uv, 1, 2, 3);

float4 c2 = diffuseMaps.Sample(diffsampler, float3(uv, 2));

c = Blend(c1, 1 - blend, c2, blend);

}

else {

c = diffuseMaps.Sample(diffsampler, float3(uv, 2));

}

return c.rgb;

}

float3 estimateNormal(float2 texcoord) {

float2 b = texcoord + float2(0.0f, -0.01f);

float2 c = texcoord + float2(0.01f, -0.01f);

float2 d = texcoord + float2(0.01f, 0.0f);

float2 e = texcoord + float2(0.01f, 0.01f);

float2 f = texcoord + float2(0.0f, 0.01f);

float2 g = texcoord + float2(-0.01f, 0.01f);

float2 h = texcoord + float2(-0.01f, 0.0f);

float2 i = texcoord + float2(-0.01f, -0.01f);

float zb = heightmap.SampleLevel(hmsampler, b, 0).x * 50;

float zc = heightmap.SampleLevel(hmsampler, c, 0).x * 50;

float zd = heightmap.SampleLevel(hmsampler, d, 0).x * 50;

float ze = heightmap.SampleLevel(hmsampler, e, 0).x * 50;

float zf = heightmap.SampleLevel(hmsampler, f, 0).x * 50;

float zg = heightmap.SampleLevel(hmsampler, g, 0).x * 50;

float zh = heightmap.SampleLevel(hmsampler, h, 0).x * 50;

float zi = heightmap.SampleLevel(hmsampler, i, 0).x * 50;

float x = zg + 2 * zh + zi - zc - 2 * zd - ze;

float y = 2 * zb + zc + zi - ze - 2 * zf - zg;

float z = 8.0f;

return normalize(float3(x, y, z));

}

min16float4 main(PixelShaderInput input) : SV_TARGET {

float3 norm = estimateNormal(input.uv);

float3 color = GetTexBySlope(acos(norm.z), input.height, input.uv * 10);

float3 light = normalize(float3(1.0f, -0.5f, -1.0f));

float diff = saturate(dot(norm, -light));

return min16float4(saturate(color * (diff + 0.1f)), 1.0f);

}

That’s it for today. The current plan is to add the ability to delete the terrain and choose a different surface to mount a new one to. I don’t know that that will really warrant its own post, so I may just tack it on to a post about adding an interface for mucking with some of the important project variables. We’ll see.

For the latest version of the code, see GitHub.

Traagen