A four-month delay since my last post. I had a pretty bad case of the Winter Doldrums, but I’m trying to get back on track now. I’ve spent the last week getting the code that loads and renders the surface meshes working. Most of that time was eaten up making stupid mistakes and then trying to figure out what I did wrong.

I won’t go into too much detail about the mistakes I made. They amount to copying and pasting code without actually reading it to see what it does. For instance, we have a class called CameraResources in our Common folder. This class is one supplied by Microsoft to control, you guessed it, the Camera. When I was trying to load the surface meshes, I was having some trouble getting the code to work, so I just started copying in everything from Microsoft’s Spatial Mapping demo. When all else failed, I copied the Common files too. It turns out there actually was a difference in this file. The original file used a ViewProjectionConstantBuffer structure that looked like this:

// Constant buffer used to send the view-projection matrices to the shader pipeline.

struct ViewProjectionConstantBuffer

{

DirectX::XMFLOAT4X4 viewProjection[2];

};

The new file not only changed this, but also moved the declaration to ShaderStructures.h, located in the Content folder. That last move was odd to me. Why would a Common file rely on a Content file? I may switch that back, but for now I followed suit. Here’s the new structure:

// Constant buffer used to send the view-projection matrices to the shader pipeline.

struct ViewProjectionConstantBuffer

{

DirectX::XMFLOAT4 cameraPosition;

DirectX::XMFLOAT4 lightPosition;

DirectX::XMFLOAT4X4 viewProjection[2];

};

That’s not a big change, but it was the source of tons of problems for me. When I first copied the RealtimeSurfaceMeshRenderer and SurfaceMesh classes, and their requisite shaders, into my program from the sample, I didn’t include the CameraResources class change. The result? I could tell that the meshes were being loaded, but they weren’t being rendered because CameraResources was still filling the buffer using the original Constant Buffer structure while the shaders expected the new one.

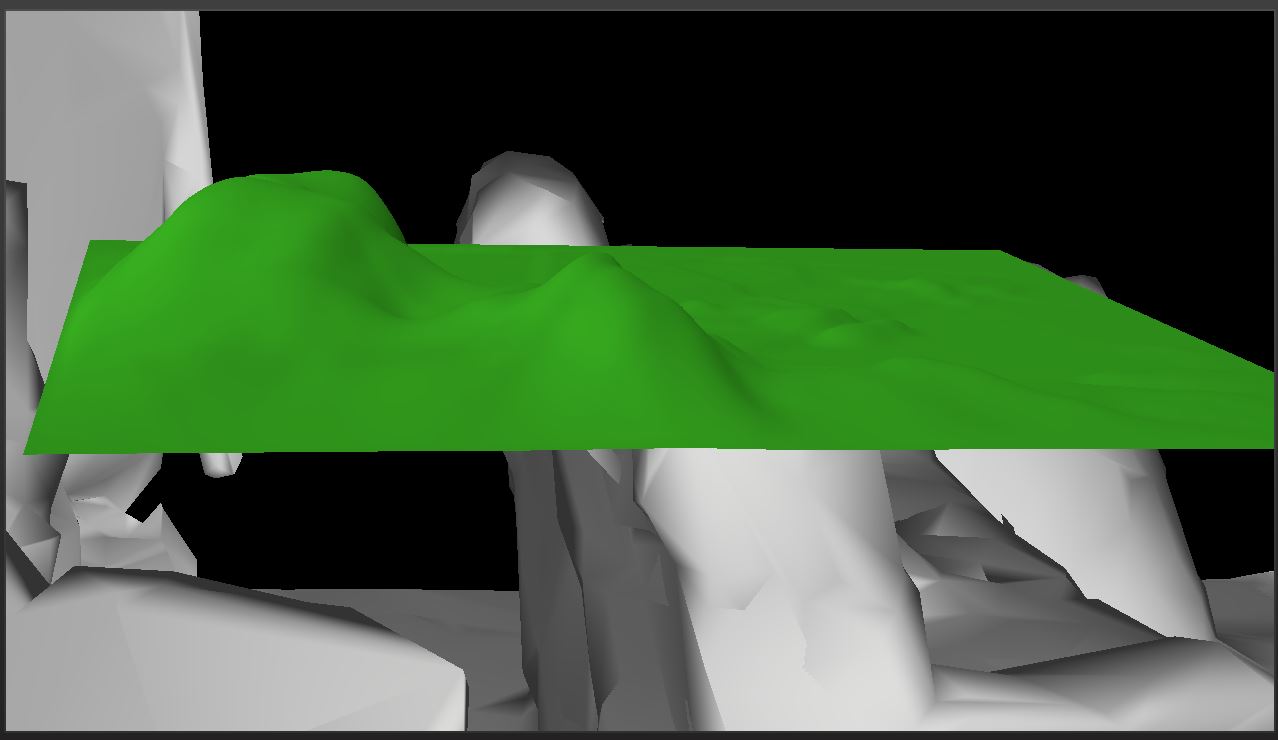

When I updated the CameraResources class, I did so without bothering to think about what the changes actually did. Thus, I didn’t update the shaders for my Terrain class to use the new Constant Buffer. Suddenly, the surface meshes started rendering perfectly, but my terrain was completely messed up.

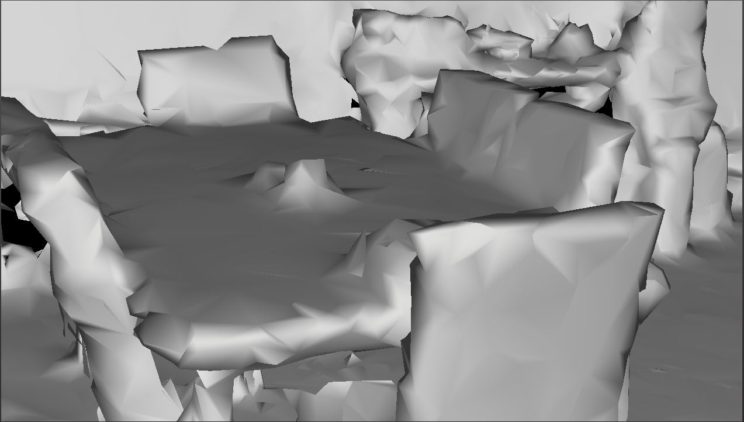

How the terrain looked after updating the CameraResources class. The surface mesh rendering is disabled for this shot.

The most embarrassing part about this is that when I was trying to figure out what I had done wrong, I assumed it was anything BUT the CameraResources class. I knew it had to be something to do with the View Projection matrix, but I thought it had to do with a change in the reference frame used. We were using a stationary frame of reference, but now we are using an attached frame of reference. This just means that our frame of reference updates as we look and move around. I went through the trouble of switching back to a stationary reference frame, only to find it made exactly no difference to either the terrain or the surface meshes. I wound up sticking with the attached frame of reference because it will be handy if I want to add a GUI of some sort. That GUI will need to be rendered relative to the camera, and the attached frame of reference gives us the coordinate system we need to do that.

The fix, for now, was to update the Constant Buffer used by the Terrain’s shaders.
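
To make the mismatch concrete, here is a minimal sketch in plain C++. It is not code from the project: Float4 and Float4x4 are hypothetical stand-ins for DirectX::XMFLOAT4 and DirectX::XMFLOAT4X4, and FirstMatrixElement() is a helper I made up for illustration. It only shows that the two layouts disagree about where the first matrix starts, which is roughly what a shader compiled against the old layout would run into.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>

// Hypothetical stand-ins for DirectX::XMFLOAT4 and DirectX::XMFLOAT4X4,
// so the sketch compiles without the DirectXMath headers.
struct Float4   { float x, y, z, w; };
struct Float4x4 { float m[4][4]; };

// Layout from the original CameraResources file.
struct OldViewProjectionConstantBuffer
{
    Float4x4 viewProjection[2];
};

// Layout from the Spatial Mapping sample's ShaderStructures.h.
struct NewViewProjectionConstantBuffer
{
    Float4   cameraPosition;
    Float4   lightPosition;
    Float4x4 viewProjection[2];
};

// Byte offset at which each layout places the first view-projection matrix.
constexpr std::size_t kOldMatrixOffset = offsetof(OldViewProjectionConstantBuffer, viewProjection);
constexpr std::size_t kNewMatrixOffset = offsetof(NewViewProjectionConstantBuffer, viewProjection);

// The two extra float4 members push the matrices further into the buffer.
static_assert(kOldMatrixOffset == 0, "old layout starts with the matrices");
static_assert(kNewMatrixOffset == 2 * sizeof(Float4), "new layout starts with two float4s");

// Reads the float that a consumer using the given offset would treat as the
// first element of the first view-projection matrix.
inline float FirstMatrixElement(const unsigned char* buffer, std::size_t matrixOffset)
{
    assert(buffer != nullptr);
    float value = 0.0f;
    std::memcpy(&value, buffer + matrixOffset, sizeof(value));
    return value;
}
```

If the buffer is filled using the new structure, a reader that still assumes the old offset picks up cameraPosition.x where it expects a matrix element, so every transform that follows is garbage.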

Anyway, after saying I wouldn’t waste time going into detail about my mistakes, I’ve blown 600 words on the topic. Let’s actually dig into how to load surface meshes.

Frames of Reference

While we’re on the subject, let me go over the changes involved in switching to an attached frame of reference.

We need to change the type of our m_referenceFrame member in the Main class.

Windows::Perception::Spatial::SpatialStationaryFrameOfReference^ m_referenceFrame; // This

Windows::Perception::Spatial::SpatialLocatorAttachedFrameOfReference^ m_referenceFrame; // Becomes this.

Changing the type means we also need to change how we initialize the variable. This line is located in our Main class’ SetHolographicSpace() method.

m_referenceFrame = m_locator->CreateStationaryFrameOfReferenceAtCurrentLocation(); // Old

m_referenceFrame = m_locator->CreateAttachedFrameOfReferenceAtCurrentHeading(); // New

In our Update() and Render() methods, and anywhere else we need the coordinate system, we need to change the following line:

SpatialCoordinateSystem^ currentCoordinateSystem = m_referenceFrame->CoordinateSystem; // Old

SpatialCoordinateSystem^ currentCoordinateSystem = m_referenceFrame->GetStationaryCoordinateSystemAtTimestamp(prediction->Timestamp); // New

You’ll notice that we are using a prediction (HolographicFramePrediction type) to get the stationary coordinate system for a given frame. I read about this concept of predictive tracking some time ago with regard to the Oculus Rift. The idea is that it takes too long to read and update our actual position and render the scene, so we instead predict the position and orientation of the camera each frame based on the already measured movement. You can read more about this here and here.
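
As a rough illustration of the idea only, here is a constant-velocity extrapolation in plain C++. I don’t know what model the HoloLens actually uses for its predictions, so treat Vec3 and PredictPosition() as hypothetical names and the whole thing as a sketch of the concept rather than the system’s method.

```cpp
#include <cassert>

// Minimal 3D vector, a hypothetical stand-in for a real math type.
struct Vec3
{
    float x, y, z;
};

// Estimates where the head will be 'secondsAhead' from now, assuming it
// keeps moving at the most recently measured velocity (meters per second).
Vec3 PredictPosition(const Vec3& position, const Vec3& velocity, float secondsAhead)
{
    assert(secondsAhead >= 0.0f);
    return Vec3{
        position.x + velocity.x * secondsAhead,
        position.y + velocity.y * secondsAhead,
        position.z + velocity.z * secondsAhead
    };
}
```

The renderer would then draw the frame for the predicted pose instead of the last measured one, so the image lines up better with where the head is by the time the frame reaches the display.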

With this changed, I don’t exactly want my terrain to be floating around in front of you as you move and look around. I want it to be locked in one place, eventually to one of those surfaces we’re trying to find.

Microsoft provides us with a reasonably simple way to deal with this. We have a type called a SpatialAnchor. I’ve added one as a member variable to our Terrain class. You can also save and load these from the SpatialAnchorStore, but I’m not going to worry about that for this project. I don’t really care about persistence or sharing.

I decided to pass my Anchor in as a variable to the constructor:

m_terrain = std::make_unique<Terrain>(m_deviceResources, 0.5f, 0.5f, 4, SpatialAnchor::TryCreateRelativeTo(currentCoordinateSystem));

That TryCreateRelativeTo() function creates an anchor relative to the current frame’s coordinate system. Note that this can fail as there is a maximum number of anchors. I haven’t done anything to protect against this as I’m only creating one anchor.

Once you have successfully created a Spatial Anchor, you can transform it to a different coordinate system (like say, from a later frame) with the following:

// Transform to the correct coordinate system from our anchor's coordinate system.

auto tryTransform = m_anchor->CoordinateSystem->TryGetTransformTo(coordinateSystem);

XMMATRIX transform;

if (tryTransform) {

// If the transform can be acquired, this spatial mesh is valid right now and

// we have the information we need to draw it this frame.

transform = XMLoadFloat4x4(&tryTransform->Value);

} else {

// just use the identity matrix if we can't load the transform for some reason.

transform = XMMatrixIdentity();

}

This matrix can then be combined with any other transformation matrices required for the hologram we’re rendering.

// Get the translation matrix.

const XMMATRIX modelTranslation = XMMatrixTranslationFromVector(XMLoadFloat3(&m_position));

// The view and projection matrices are provided by the system; they are associated

// with holographic cameras, and updated on a per-camera basis.

// Here, we provide the model transform for the sample hologram. The model transform

// matrix is transposed to prepare it for the shader.

XMStoreFloat4x4(&m_modelConstantBufferData.modelToWorld, XMMatrixTranspose(modelTranslation * transform));

I should mention that the SurfaceMesh class provided by Microsoft doesn’t use Spatial Anchors. It appears that the provided surface meshes are using an internal coordinate system. Each SpatialSurfaceMesh object has a publicly accessible CoordinateSystem member variable.

That’s about all I think is useful to know at this stage about Reference Frames. If you want to read more, see this MSDN page.

Finding Surface Meshes

I may mess around with my code a bit later to see if I can do less in the Update() method, but for now I’m using pretty much exactly the same setup as found in the Spatial Mapping demo.

We’ll need a few new variables in our Main class to track our state:

Windows::Perception::Spatial::Surfaces::SpatialSurfaceObserver^ m_surfaceObserver;

bool m_surfaceAccessAllowed = false;

bool m_spatialPerceptionAccessRequested = false;

Windows::Perception::Spatial::Surfaces::SpatialSurfaceMeshOptions^ m_surfaceMeshOptions;

// A data handler for surface meshes.

std::unique_ptr<RealtimeSurfaceMeshRenderer> m_meshRenderer;

The Booleans are just for tracking (as the names suggest) whether we’ve requested access to the surface meshes and whether we were granted access. The HoloLens automatically tracks its location and generates these surface meshes, but in order for an App to make use of that data, it must be granted access by the User. This is automatic in the emulator, but will cause a security popup on the real thing.

The below code basically says that if we haven’t already initialized a Surface Observer, we need to request access to the surface data. If that access is granted, then we can continue.

if (!m_surfaceObserver) {

// Initialize the Surface Observer using a valid coordinate system.

if (!m_spatialPerceptionAccessRequested) {

// The spatial mapping API reads information about the user's environment. The user must

// grant permission to the app to use this capability of the Windows Holographic device.

auto initSurfaceObserverTask = create_task(SpatialSurfaceObserver::RequestAccessAsync());

initSurfaceObserverTask.then([this, currentCoordinateSystem](Windows::Perception::Spatial::SpatialPerceptionAccessStatus status) {

if (status == SpatialPerceptionAccessStatus::Allowed) {

m_surfaceAccessAllowed = true;

}

});

m_spatialPerceptionAccessRequested = true;

}

}

Once we are granted access to the surface data, we can generate a Surface Observer to actually pull the mesh data.

The first thing the sample code does is create a bounding box to specify the region of the world we want the surface data for. The sample uses a 20x20x5 meter box for this, but we could use pretty much any bounding volume we wanted, including the view frustum.

SpatialBoundingBox aabb = {

{ 0.f, 0.f, 0.f },

{ 20.f, 20.f, 5.f },

};

SpatialBoundingVolume^ bounds = SpatialBoundingVolume::FromBox(currentCoordinateSystem, aabb);

The sample code also sets a few mesh options, saving them to the m_surfaceMeshOptions member variable. My tests show that the values currently set are actually the defaults, so we probably don’t need to set them as long as we’re happy with these values. That being said, it can’t hurt to set them, just to be sure.

// First, we'll set up the surface observer to use our preferred data formats.

// In this example, a "preferred" format is chosen that is compatible with our precompiled shader pipeline.

m_surfaceMeshOptions = ref new SpatialSurfaceMeshOptions();

IVectorView<DirectXPixelFormat>^ supportedVertexPositionFormats = m_surfaceMeshOptions->SupportedVertexPositionFormats;

unsigned int formatIndex = 0;

if (supportedVertexPositionFormats->IndexOf(DirectXPixelFormat::R16G16B16A16IntNormalized, &formatIndex)) {

m_surfaceMeshOptions->VertexPositionFormat = DirectXPixelFormat::R16G16B16A16IntNormalized;

}

IVectorView<DirectXPixelFormat>^ supportedVertexNormalFormats = m_surfaceMeshOptions->SupportedVertexNormalFormats;

if (supportedVertexNormalFormats->IndexOf(DirectXPixelFormat::R8G8B8A8IntNormalized, &formatIndex)) {

m_surfaceMeshOptions->VertexNormalFormat = DirectXPixelFormat::R8G8B8A8IntNormalized;

}

Oddly, this variable isn’t actually getting used anywhere. It isn’t used at all anywhere in the Main class and it never gets passed in to the RealtimeSurfaceMeshRenderer class. I have not yet moved or removed it, but I will be moving it to the RealtimeSurfaceMeshRenderer::AddOrUpdateSurfaceAsync() method, which is the only place a SpatialSurfaceMeshOptions variable is used. I mention it here only for the sake of covering the current state of the project.

Now we can finally get the surfaces.

// Create the observer.

m_surfaceObserver = ref new SpatialSurfaceObserver();

if (m_surfaceObserver) {

m_surfaceObserver->SetBoundingVolume(bounds);

// If the surface observer was successfully created, we can initialize our

// collection by pulling the current data set.

auto mapContainingSurfaceCollection = m_surfaceObserver->GetObservedSurfaces();

for (auto const& pair : mapContainingSurfaceCollection) {

auto const& id = pair->Key;

auto const& surfaceInfo = pair->Value;

m_meshRenderer->AddSurface(id, surfaceInfo);

}

This code is pretty straightforward. We initialize our Surface Observer and set the bounding volume. After that, we request a collection of any surfaces found within that volume.

I originally tried to implement this myself within the SetHolographicSpace() method, but I would often get an empty set back. Since the Sample code does this in the Update() method, I am as well. We then simply pass each surface to the m_meshRenderer object to load and save.

How the HoloLens divides space up into these surfaces, I can’t say. I thought I read somewhere that each surface mesh covered a certain volume of space, but I can’t find that reference anymore.

The following method is the workhorse of the RealtimeSurfaceMeshRenderer class. Ultimately, this saves and updates surface meshes.

Concurrency::task<void> RealtimeSurfaceMeshRenderer::AddOrUpdateSurfaceAsync(Guid id, SpatialSurfaceInfo^ newSurface)

{

auto options = ref new SpatialSurfaceMeshOptions();

options->IncludeVertexNormals = true;

// The level of detail setting is used to limit mesh complexity, by limiting the number

// of triangles per cubic meter.

auto createMeshTask = create_task(newSurface->TryComputeLatestMeshAsync(m_maxTrianglesPerCubicMeter, options));

auto processMeshTask = createMeshTask.then([this, id](SpatialSurfaceMesh^ mesh)

{

if (mesh != nullptr)

{

std::lock_guard<std::mutex> guard(m_meshCollectionLock);

auto& surfaceMesh = m_meshCollection[id];

surfaceMesh.UpdateSurface(mesh);

surfaceMesh.SetIsActive(true);

}

}, task_continuation_context::use_current());

return processMeshTask;

}

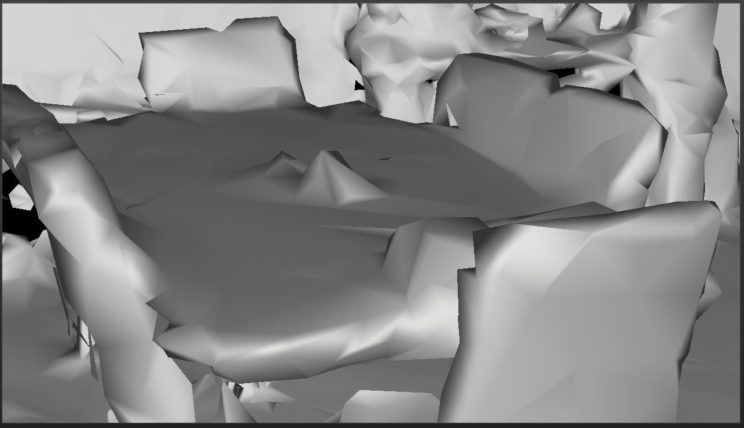

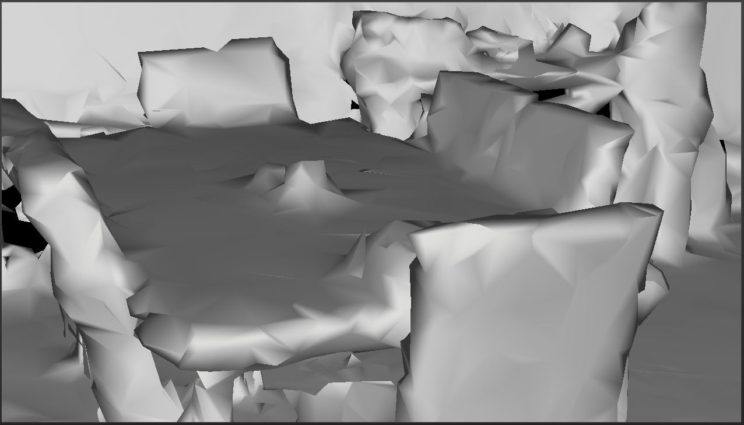

As you can see, it is here that Mesh Options are used; however, those options are also defined here, not passed in. We also have a m_maxTrianglesPerCubicMeter member variable. This is currently set to 1000. It can be adjusted for higher quality or performance; however, I’ve read that going much higher than this doesn’t actually provide a ton more quality.

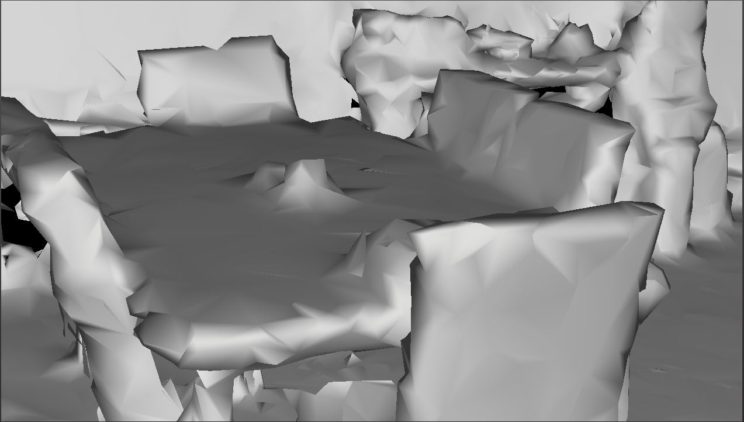

I’ll let you judge for yourself:

The rest of the method just checks if the id is already in the std::map collection, adds it if it isn’t, and then updates it with the current data. The SurfaceMesh::UpdateSurface() method literally just assigns the new SpatialSurfaceMesh object to its internal pointer. There’s nothing funky going on, so I won’t get into it.
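
The "add it if it isn’t there, then update it" behaviour falls out of std::map::operator[], which default-constructs an entry the first time a key is used. Here is a small plain C++ sketch of that pattern. MeshEntry and MeshStore are hypothetical stand-ins (with a string id and an int version instead of a Guid and a SpatialSurfaceMesh), not the sample’s classes.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <mutex>
#include <string>

// Hypothetical stand-in for SurfaceMesh: just enough state to show the pattern.
struct MeshEntry
{
    int  version = 0;     // stands in for the stored SpatialSurfaceMesh
    bool active  = false;
};

class MeshStore
{
public:
    // Adds the entry if the id is new, then overwrites its data either way.
    void AddOrUpdate(const std::string& id, int version)
    {
        std::lock_guard<std::mutex> guard(m_lock);
        MeshEntry& entry = m_meshes[id]; // default-constructs on first use
        entry.version = version;
        entry.active  = true;
    }

    std::size_t Count() const
    {
        std::lock_guard<std::mutex> guard(m_lock);
        return m_meshes.size();
    }

    // Returns the stored version; the id must already be present.
    int VersionOf(const std::string& id) const
    {
        std::lock_guard<std::mutex> guard(m_lock);
        auto it = m_meshes.find(id);
        assert(it != m_meshes.end());
        return it->second.version;
    }

private:
    mutable std::mutex               m_lock;
    std::map<std::string, MeshEntry> m_meshes;
};
```

The lock matters because the mesh task’s continuation may run while another part of the code is reading the collection, which is presumably why the sample guards m_meshCollection the same way.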

Just to finish up about finding the surface meshes, the Sample code also updates the meshes dynamically using an Event. The following code directly follows the preceding bit.

// We then subscribe to an event to receive up-to-date data.

m_surfacesChangedToken = m_surfaceObserver->ObservedSurfacesChanged += ref new TypedEventHandler<SpatialSurfaceObserver^, Platform::Object^>(

bind(&HoloLensTerrainGenDemoMain::OnSurfacesChanged, this, _1, _2)

);

}

}

// Keep the surface observer positioned at the device's location.

m_surfaceObserver->SetBoundingVolume(bounds);

}

The method called on this event looks like this:

void HoloLensTerrainGenDemoMain::OnSurfacesChanged(SpatialSurfaceObserver^ sender, Object^ args) {

IMapView<Guid, SpatialSurfaceInfo^>^ const& surfaceCollection = sender->GetObservedSurfaces();

// Process surface adds and updates.

for (const auto& pair : surfaceCollection) {

auto id = pair->Key;

auto surfaceInfo = pair->Value;

// Choose whether to add, or update the surface.

// In this example, new surfaces are treated differently by highlighting them in a different

// color. This allows you to observe changes in the spatial map that are due to new meshes,

// as opposed to mesh updates.

// In your app, you might choose to process added surfaces differently than updated

// surfaces. For example, you might prioritize processing of added surfaces, and

// defer processing of updates to existing surfaces.

if (m_meshRenderer->HasSurface(id)) {

if (m_meshRenderer->GetLastUpdateTime(id).UniversalTime < surfaceInfo->UpdateTime.UniversalTime) {

// Update existing surface.

m_meshRenderer->UpdateSurface(id, surfaceInfo);

}

} else {

// New surface.

m_meshRenderer->AddSurface(id, surfaceInfo);

}

}

// Sometimes, a mesh will fall outside the area that is currently visible to

// the surface observer. In this code sample, we "sleep" any meshes that are

// not included in the surface collection to avoid rendering them.

// The system can include them in the collection again later, in which case

// they will no longer be hidden.

m_meshRenderer->HideInactiveMeshes(surfaceCollection);

}
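
Stripped of the Windows types, the handler boils down to a three-way decision per surface plus a sweep that hides anything no longer reported. Here is a plain C++ sketch of just that logic. The names (SurfaceAction, TrackedMesh, Classify, HideInactive) and the int ids are hypothetical, and the sweep is my guess at what HideInactiveMeshes() amounts to, not a copy of the sample’s implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <set>

enum class SurfaceAction { Add, Update, Skip };

// Hypothetical stand-in for the per-surface state the renderer keeps.
struct TrackedMesh
{
    std::int64_t lastUpdateTime; // plays the role of UpdateTime.UniversalTime
    bool         active;
};

using MeshMap = std::map<int, TrackedMesh>;

// Decides what to do with a surface the observer just reported.
SurfaceAction Classify(const MeshMap& tracked, int id, std::int64_t reportedTime)
{
    assert(reportedTime >= 0);
    auto it = tracked.find(id);
    if (it == tracked.end())
    {
        return SurfaceAction::Add;    // never seen before
    }
    return (it->second.lastUpdateTime < reportedTime)
        ? SurfaceAction::Update       // the observer has newer data
        : SurfaceAction::Skip;        // our copy is already current
}

// Marks each tracked mesh active only if it is still in the observed set.
// Returns how many meshes ended up hidden.
std::size_t HideInactive(MeshMap& tracked, const std::set<int>& observedIds)
{
    std::size_t hidden = 0;
    for (auto& pair : tracked)
    {
        pair.second.active = observedIds.count(pair.first) > 0;
        if (!pair.second.active)
        {
            ++hidden;
        }
    }
    return hidden;
}
```

Note that hidden meshes stay in the map, so if the observer reports them again later they can simply be reactivated instead of being rebuilt from scratch.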

Rendering the Surface Meshes

I don’t want to waste too much time here. I didn’t write the code and I haven’t changed it. I simply copied the classes and shaders over from the Sample.

The shader code is pretty straightforward. One thing of note is that the cameraPosition and lightPosition variables that were added to the ViewProjectionConstantBuffer structure are not actually set or used anywhere. I’m not sure why Microsoft has them in the sample at all.

The Sample code is set up to be able to render the surface mesh both in wireframe and solid. I’ve hard-coded it to solid as the wireframe is hard to read.

In terms of what does what, the RealtimeSurfaceMeshRenderer loads and manages the shaders, and the SurfaceMesh class manages the vertex, index, and normal buffers for each surface.

This is all pretty typical DirectX code that we’ve been over before, so I’ll skip it here.

I think that’s about all I want to cover for today’s post. With what we’ve been over so far, we can now load and render the surface meshes. We also have the terrain rendering at the same time, although it is still just floating in space in front of us. You can see a shot of that in the Featured Image at the top.

I’ll also need to add code so that the meshes occlude the terrain when we’re NOT rendering them. I think I’ll work on this next. So in my next post, my plan is to discuss rendering the surface meshes depth only, as well as playing around with moving some of the above code around so our Update() method isn’t so bulky.

For more information on Spatial Mapping, see here and here.

For the latest version of the code, go to GitHub.

Traagen

Hey,

do you maybe know if it is possible to acquire correct mesh data without a HolographicFrame?

I’m currently trying to get the mesh data from the HoloLens and send it to a server to render the environment in a separate program. To get the mesh data I’m also using the surface observer, but instead of the coordinate system from the reference frame I’m using the method of the locator to get the current coordinate system. But I’m not sure if the mesh data is correct. Firstly, if I draw the meshes (for example, the first mesh collection) they overlap, and if I include the second mesh collection I get meshes that look identical to some meshes from the first collection.

I should mention that I’m updating the surface observer manually, so I’m calling it the moment the server is ready to receive more meshes.

Best regards,

Waldemar

I am by no means an expert and haven’t looked at this stuff in quite a while, but if I am understanding you, I think you do need the holographic frame of reference. I believe the HoloLens gets surface mesh data relative to its current position and orientation. You can transform the resulting data into a different coordinate system, perhaps a stationary global system, but I think you would still need to use the current coordinate system of the device to get valid surface mesh data.

Have you seen this article from Microsoft?

https://docs.microsoft.com/en-us/windows/mixed-reality/coordinate-systems

It outlines various frames of reference and some pros and cons.

I’m not clear on what you meant about the first and second mesh collections. Can you clarify that? Also, I’m not sure what method you are using to get the current coordinate system. Is that a method of SpatialLocator? I didn’t see any such method in the docs for that.

The “mesh collections” are the meshes I receive when the GetObservedSurfaces() method is called, since I’m doing it manually and not with the OnSurfacesChanged event.

I tried to get the current coordinate system with the “CreateStationaryFrameOfReferenceAtCurrentLocation” method from the SpatialLocator, and through that method I can acquire a coordinate system. My thought was to get the current coordinate system like that, acquire the meshes for this coordinate system and transform the previous meshes accordingly.

Currently I’m reworking the project to work with a HolographicFrame and I would like to ask you if it is necessary to update all existing meshes with the newly acquired coordinate system and not with a base coordinate system which is created for the first meshes?

I ask this question due to the lack of my understanding about the SpatialLocatorAttachedFrameOfReference.

Also, thanks for the reply 🙂

You thank me for a reply and then I fail to reply to you for 3 weeks. Go figure. I hope you figured this out or found help elsewhere and aren’t waiting on me.

My understanding of what you are doing is that you are rendering the collected meshes in a separate system rather than rendering them on the HoloLens. Given that, I would say that yes, you should be able to use a base coordinate system and transform all new meshes to that coordinate system. To render on the HoloLens, you would need to transform back to a current coordinate system obtained from the Attached Frame of Reference, but if this isn’t your goal, then I don’t see any reason to do so.

I think you are going to wind up with a lot of overlapping meshes, as it sounds like you have already discovered. My understanding is that the HoloLens breaks the world up into volumes and creates separate meshes for each volume. As you look around the world and new spaces come into view, the meshes generated for those spaces may represent parts of the same objects in previous meshes, but they will be separate. The plane generation code Microsoft provides does a bunch of merging of the found planes, but if you want to stitch together the raw meshes, I think you would have to handle that yourself.