In my last post, I ran into a problem: I had more things to control than I had input options. Today, we fix that.

All of the HoloLens sample code I’ve seen from Microsoft uses the same SpatialInputHandler class. It wraps a SpatialInteractionManager and handles its SourcePressed event. When handling the event (which covers basically any type of input, from a simple air tap gesture, to pressing a button on an input device, to a basic speech command), the class just stores the state of the source. Then, on Update, the Main class checks the input handler for any recent input.
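To make the store-then-poll pattern concrete, here is a simplified, platform-independent model of it. This is a sketch, not the sample’s actual code: SourceSnapshot and PollingInputHandler are hypothetical names, and the stored value is a plain copyable struct rather than a WinRT object. The point is that the event callback only records what happened, and nothing acts on the input until Update() calls CheckForInput().

```cpp
#include <optional>

// Hypothetical stand-in for the data the handler keeps: a plain, copyable
// snapshot, not the live platform event object.
struct SourceSnapshot {
    int sourceKind;  // e.g. hand, controller, voice (illustrative only)
    bool pressed;
};

// Simplified model of the store-then-poll pattern: the event callback only
// records the most recent input, and the main loop collects it on Update.
class PollingInputHandler {
public:
    // Called from the input event (the "source pressed" callback).
    void OnSourcePressed(const SourceSnapshot& state) { m_pending = state; }

    // Called once per frame from Update(); returns the stored input, if any,
    // and clears it so the same press is not processed twice.
    std::optional<SourceSnapshot> CheckForInput() {
        std::optional<SourceSnapshot> result = m_pending;
        m_pending.reset();
        return result;
    }

private:
    std::optional<SourceSnapshot> m_pending;
};
```

Because this model keeps only the latest snapshot, two presses in the same frame collapse into one, and the work always happens a frame after the event.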

The problem with this is that we’re not handling the input event when it happens. We’re storing it and handling it in the next frame. On its own, that wouldn’t be an issue, but it becomes one given how we’re going to handle input from here on.

The Gesture Recognizer

The existing SpatialInputHandler works with simple source events, where you just get a source type and state back from the event. In that sense, you could consider it a lower-level handler. If you wanted to create custom gestures, this may be how to do it; however, Microsoft provides a class, SpatialGestureRecognizer, that recognizes and lets you handle some basic built-in gestures. This class gives us plenty of inputs for this project: we can recognize a tap, a double tap, and ‘hold’-related gestures that allow moving holograms or navigating menus.

Microsoft intended for each hologram to have its own Gesture Recognizer, so that specific holograms can respond to specific gestures. As such, you want to handle the Interaction Manager’s InteractionDetected event. That event provides a SpatialInteraction object, which includes a source state from which we can get a SpatialPointerPose describing the user’s current head position and gaze direction. We’re then supposed to route the Interaction to the appropriate hologram by casting the gaze ray into the scene and intersecting it with the holograms. Once the target is found, we send the Interaction to that hologram’s Gesture Recognizer via its CaptureInteraction() method.
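The routing step can be sketched as a nearest-hit search along the gaze ray. This is a minimal illustration under some assumptions: each hologram is approximated by a hypothetical bounding sphere (HologramBounds), the gaze direction is normalized, and the ray-sphere test is the standard one from Ericson’s Real-Time Collision Detection. The returned id stands in for whichever hologram’s Gesture Recognizer should receive CaptureInteraction().

```cpp
#include <cmath>
#include <limits>
#include <optional>
#include <vector>

struct Vec3 { float x, y, z; };

static Vec3 Sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static float Dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Hypothetical hologram record: a bounding sphere plus an id that would map
// to that hologram's own gesture recognizer.
struct HologramBounds { int id; Vec3 center; float radius; };

// Distance along a normalized ray to a sphere, or nothing if the ray misses
// it or the sphere lies entirely behind the ray origin.
static std::optional<float> RaySphere(Vec3 origin, Vec3 dir, const HologramBounds& h) {
    Vec3 m = Sub(origin, h.center);
    float b = Dot(m, dir);
    float c = Dot(m, m) - h.radius * h.radius;
    if (c > 0.0f && b > 0.0f) return std::nullopt;  // outside and pointing away
    float disc = b * b - c;
    if (disc < 0.0f) return std::nullopt;           // ray misses the sphere
    float t = -b - std::sqrt(disc);
    return t < 0.0f ? 0.0f : t;                     // origin inside: hit at t = 0
}

// Returns the id of the closest hologram under the gaze ray, or -1 if none.
int PickGazeTarget(Vec3 head, Vec3 forward, const std::vector<HologramBounds>& holograms) {
    int best = -1;
    float bestT = std::numeric_limits<float>::infinity();
    for (const auto& h : holograms) {
        if (auto t = RaySphere(head, forward, h); t && *t < bestT) {
            bestT = *t;
            best = h.id;
        }
    }
    return best;
}
```

Picking the closest hit matters when holograms overlap along the gaze: the one nearest the user is most likely the one they meant.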

I tried following the same structure as Microsoft’s own input handler class: I simply added a handler for the new event that saved the Interaction. I then updated the CheckForInput() method to return the Interaction instead of just a source state. On Update, I would check for input as usual and pass the returned Interaction to the terrain’s Gesture Recognizer.

The application threw an exception as soon as I clicked. Or rather, it threw the exception on the very next frame, when it tried to act on the Interaction created by my click. It turns out those Interactions can’t really be saved like that. Maybe I could have jumped through some hoops to make a copy that wouldn’t be destroyed once the event handler finished, but that seemed like more headache than it was worth.

The thing about the SpatialInputHandler class is that it is really just a wrapper for the SpatialInteractionManager. It doesn’t add anything, and it doesn’t do any real processing of the event. It can’t, really: it doesn’t (and shouldn’t) know anything about the objects that exist in our virtual world. All it can do is save the state at the time of the input and wait for the Main class to request that information. Only then can the Main class actually handle the event.

So why not cut out the middle man? I added the Interaction Manager to the Main class and handled the InteractionDetected event right there. Now, when the event triggers, I can immediately pass it to the terrain. No more exception.

```cpp
void HoloLensTerrainGenDemoMain::OnInteractionDetected(SpatialInteractionManager^ sender, SpatialInteractionDetectedEventArgs^ args) {
    // If the terrain does not exist, or the interaction does not apply to the terrain,
    // then send the interaction to the GUI manager.
    if (!m_terrain || !m_terrain->CaptureInteraction(args->Interaction)) {
        m_guiManager->CaptureInteraction(args->Interaction);
    }
}
```

The GUIManager class is just a stub right now. It handles any input not meant for the terrain. Eventually, I’ll add actual GUI elements that we’ll be able to use to adjust certain variables. In the meantime, a single tap toggles whether the surface meshes are rendered solid or in wireframe, and a double tap toggles whether the surface meshes are rendered at all.

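```cpp
void GUIManager::OnTap(SpatialGestureRecognizer^ sender, SpatialTappedEventArgs^ args) {
    switch (args->TapCount) {
    case 1:
        // Single tap: toggle solid/wireframe rendering of the surface meshes.
        renderWireframe = !renderWireframe;
        break;
    case 2:
        // Double tap: toggle whether the surface meshes are drawn at all.
        renderSurfaces = !renderSurfaces;
        break;
    }
}
```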

```cpp
bool HoloLensTerrainGenDemoMain::Render(Windows::Graphics::Holographic::HolographicFrame^ holographicFrame) {
    //...
    // Only render world-locked content when positional tracking is active.
    if (cameraActive) {
        m_meshRenderer->Render(pCameraResources->IsRenderingStereoscopic(), m_guiManager->GetRenderWireframe(), !m_guiManager->GetRenderSurfaces());
        //...
    }
}
```

One thing to note here is that you probably don’t want to recognize both taps and double taps. A double tap always fires a single-tap event first, so in my case I toggle wireframe rendering every time I toggle surface mesh rendering. That’s fine for me because the effect is symmetric: if I toggle surface mesh rendering off and then back on again, I also toggle wireframe rendering off and back on, and I end up in the same state I started in. Not every situation will work out so neatly. And it’s temporary anyway.
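The symmetry argument can be checked with a small model of OnTap(). This sketch assumes, as described above, that a double tap arrives as a TapCount of 1 followed by a TapCount of 2; GuiToggles and DoubleTap() are hypothetical, and the starting values of the two flags are arbitrary.

```cpp
// Hypothetical model of the GUI toggles from OnTap(), assuming a double tap
// is delivered as a TapCount == 1 event followed by a TapCount == 2 event.
struct GuiToggles {
    bool renderWireframe = false;
    bool renderSurfaces = true;

    void OnTap(unsigned tapCount) {
        switch (tapCount) {
        case 1: renderWireframe = !renderWireframe; break;
        case 2: renderSurfaces = !renderSurfaces; break;
        }
    }
};

// Replays the event sequence that one double tap produces.
void DoubleTap(GuiToggles& gui) {
    gui.OnTap(1);
    gui.OnTap(2);
}
```

Two double taps return both flags to their starting values, while a lone single tap only flips the wireframe flag.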

So how do we actually handle the interaction to get to that OnTap() method? In the simplest case, we just pass it on to the Gesture Recognizer.

```cpp
void GUIManager::CaptureInteraction(SpatialInteraction^ interaction) {
    m_gestureRecognizer->CaptureInteraction(interaction);
}
```

The Gesture Recognizer checks the incoming interaction against the gestures it is configured to recognize. I set these up in the constructor, but you can change the recognized gestures at runtime using TrySetGestureSettings().

```cpp
#include <functional>                // std::bind
using namespace std::placeholders;   // _1, _2 used with std::bind below

GUIManager::GUIManager() {
    // Set up a general gesture recognizer for input.
    m_gestureRecognizer = ref new SpatialGestureRecognizer(
        SpatialGestureSettings::DoubleTap | SpatialGestureSettings::Tap | SpatialGestureSettings::Hold);

    // Single and double taps both arrive through the Tapped event.
    m_tapGestureEventToken =
        m_gestureRecognizer->Tapped +=
            ref new Windows::Foundation::TypedEventHandler<SpatialGestureRecognizer^, SpatialTappedEventArgs^>(
                std::bind(&GUIManager::OnTap, this, _1, _2));

    // Hold is recognized, but OnHoldCompleted() does nothing yet.
    m_holdGestureCompletedEventToken =
        m_gestureRecognizer->HoldCompleted +=
            ref new Windows::Foundation::TypedEventHandler<SpatialGestureRecognizer^, SpatialHoldCompletedEventArgs^>(
                std::bind(&GUIManager::OnHoldCompleted, this, _1, _2));
}
```

As you can see, I’ve configured my GUI Manager to recognize tap, double tap, and hold. It doesn’t currently do anything on hold, but I left it in anyway. Which events you need to handle depends on the gestures you enable. In our case, we really only need the Tapped event. Its arguments report the number of taps (TapCount), so one event handler can deal with both single and double taps, as shown above.
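The `|` in the constructor works because SpatialGestureSettings is a flags enumeration: each gesture is a separate bit, and OR-ing them enables several at once. The sketch below imitates that idea with a hypothetical GestureSettings enum; the bit values are illustrative, not the real WinRT values.

```cpp
#include <cstdint>

// Hypothetical flags enum mirroring the idea behind SpatialGestureSettings:
// each gesture is one bit. Values are illustrative, not the WinRT values.
enum class GestureSettings : std::uint32_t {
    None      = 0,
    Tap       = 1u << 0,
    DoubleTap = 1u << 1,
    Hold      = 1u << 2,
};

// Combine settings bit by bit, as in Tap | DoubleTap | Hold.
constexpr GestureSettings operator|(GestureSettings a, GestureSettings b) {
    return static_cast<GestureSettings>(static_cast<std::uint32_t>(a) |
                                        static_cast<std::uint32_t>(b));
}

// True if every bit in `wanted` is enabled in `settings`.
constexpr bool IsEnabled(GestureSettings settings, GestureSettings wanted) {
    return (static_cast<std::uint32_t>(settings) & static_cast<std::uint32_t>(wanted)) ==
           static_cast<std::uint32_t>(wanted);
}
```

Under this model, the GUI recognizer’s settings include all three gestures, while a terrain recognizer configured with Tap alone would not include DoubleTap.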

The Terrain class looks basically the same, except I only handle single taps and all I do on tap is reset the height map. The big difference is that we only want to handle the Interaction in the terrain at all if the user is looking at the terrain.

Intersecting the Gaze Ray with the Terrain

Here’s the Terrain’s CaptureInteraction() method:

```cpp
bool Terrain::CaptureInteraction(SpatialInteraction^ interaction) {
    // Intersect the user's gaze with the terrain to see if this interaction is
    // meant for the terrain.
    auto gaze = interaction->SourceState->TryGetPointerPose(m_anchor->CoordinateSystem);

    // TryGetPointerPose() returns nullptr when no pose is available, so don't
    // claim the interaction in that case.
    if (gaze == nullptr) {
        return false;
    }

    auto head = gaze->Head;
    auto position = head->Position;
    auto look = head->ForwardDirection;

    // Calculate the AABB for the terrain.
    AABB vol;
    vol.max.x = m_position.x + m_width;
    vol.max.y = m_position.y + FindMaxHeight();
    vol.max.z = m_position.z + m_height;
    vol.min.x = m_position.x;
    vol.min.y = m_position.y;
    vol.min.z = m_position.z;

    if (RayAABBIntersect(position, look, vol)) {
        // If so, handle the interaction and return true.
        m_gestureRecognizer->CaptureInteraction(interaction);
        return true;
    }

    // If it doesn't intersect, then don't handle the interaction and return false.
    return false;
}
```

As you can see, we pull the user’s current head position and gaze direction out of the Interaction. I was happy to see that, by passing the anchor’s coordinate system to TryGetPointerPose(), I could get the gaze expressed directly in the coordinate system of our anchor. That certainly simplifies the math.
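For reference, the change of coordinate system that TryGetPointerPose() handles for us looks roughly like this for a rigid transform. The sketch assumes a hypothetical Frame type holding the anchor’s origin and orthonormal axes expressed in world space. The important detail is that the head position is a point, so it is translated and then rotated, while the forward direction is a direction, so it is only rotated.

```cpp
struct Vec3 { float x, y, z; };

static Vec3 Sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static float Dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Hypothetical description of an anchor's frame in world space: an origin
// plus three orthonormal axes (a rigid transform, no scale).
struct Frame { Vec3 origin, xAxis, yAxis, zAxis; };

// A point is made relative to the frame origin, then projected onto its axes.
Vec3 PointToLocal(const Frame& f, Vec3 p) {
    Vec3 rel = Sub(p, f.origin);
    return {Dot(rel, f.xAxis), Dot(rel, f.yAxis), Dot(rel, f.zAxis)};
}

// A direction is only rotated: translation does not apply to directions.
Vec3 DirectionToLocal(const Frame& f, Vec3 d) {
    return {Dot(d, f.xAxis), Dot(d, f.yAxis), Dot(d, f.zAxis)};
}
```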

We then build a simple axis-aligned bounding box (AABB) for the terrain. Currently, the variable m_position stores the origin (minimum corner) of the terrain, which was placed so that the Anchor would be at the center of the terrain. The maximum bounds are set by adding the terrain’s width (along x) and height (here, its extent along z) in world units to the position. The vertical bound comes from FindMaxHeight(), which just finds the highest value stored in the height map.

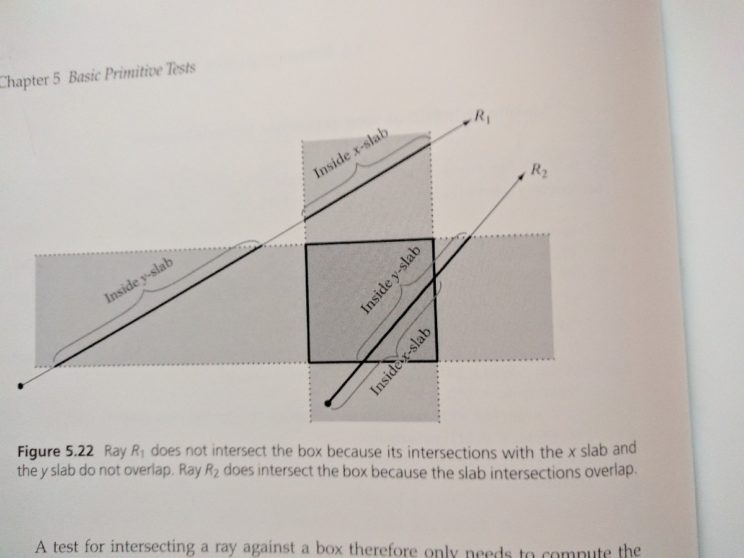
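The bounding box construction is simple enough to sketch outside the HoloLens code. Here FindMaxHeight() takes the height map as a plain vector, TerrainBounds() mirrors the AABB setup in Terrain::CaptureInteraction(), and OriginForCenteredAnchor() shows one way an origin could be chosen so the anchor sits at the terrain’s center. All three are hypothetical stand-ins, not the project’s code, and they assume non-negative heights, since the box’s floor is the terrain origin.

```cpp
#include <algorithm>
#include <vector>

struct Vec3 { float x, y, z; };
struct AABB { Vec3 min, max; };

// Hypothetical FindMaxHeight(): largest value in a non-empty height map.
float FindMaxHeight(const std::vector<float>& heights) {
    return *std::max_element(heights.begin(), heights.end());
}

// Box spanning [origin, origin + extents]: width runs along x, depth (the
// post's m_height) along z, and the vertical extent comes from the height map.
AABB TerrainBounds(Vec3 origin, float width, float depth, const std::vector<float>& heights) {
    AABB box;
    box.min = origin;
    box.max = {origin.x + width, origin.y + FindMaxHeight(heights), origin.z + depth};
    return box;
}

// Assumed placement: the origin sits half an extent back from the anchor on
// x and z, so the anchor ends up at the terrain's center.
Vec3 OriginForCenteredAnchor(Vec3 anchor, float width, float depth) {
    return {anchor.x - width * 0.5f, anchor.y, anchor.z - depth * 0.5f};
}
```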

We can then pass this data to a simple Ray-AABB intersection test.

```cpp
#include <cfloat>   // FLT_MAX
#include <cmath>    // fabsf, fmaxf, fminf
#include <utility>  // std::swap

// p is the ray origin and d the ray direction (float3 from
// Windows::Foundation::Numerics). EPSILON is assumed to be a small tolerance
// defined elsewhere in the project.
bool RayAABBIntersect(float3 p, float3 d, AABB a) {
    float tmin = 0.0f;     // start at 0 so only hits in front of the origin count
    float tmax = FLT_MAX;

    // X slab.
    if (fabsf(d.x) < EPSILON) {
        // Ray is parallel to slab (direction component ~0). No hit if origin not within slab.
        if (p.x < a.min.x || p.x > a.max.x) {
            return false;
        }
    } else {
        // Compute intersection t value of ray with near and far plane of slab.
        float ood = 1.0f / d.x;
        float t1 = (a.min.x - p.x) * ood;
        float t2 = (a.max.x - p.x) * ood;
        // Make t1 be intersection with near plane, t2 with far plane.
        if (t1 > t2) {
            std::swap(t1, t2);
        }
        // Compute the intersection of slab intersection intervals.
        tmin = fmaxf(tmin, t1);
        tmax = fminf(tmax, t2);
        // Exit with no collision as soon as slab intersection becomes empty.
        if (tmin > tmax) {
            return false;
        }
    }

    // Y slab.
    if (fabsf(d.y) < EPSILON) {
        // Ray is parallel to slab. No hit if origin not within slab.
        if (p.y < a.min.y || p.y > a.max.y) {
            return false;
        }
    } else {
        // Compute intersection t value of ray with near and far plane of slab.
        float ood = 1.0f / d.y;
        float t1 = (a.min.y - p.y) * ood;
        float t2 = (a.max.y - p.y) * ood;
        // Make t1 be intersection with near plane, t2 with far plane.
        if (t1 > t2) {
            std::swap(t1, t2);
        }
        // Compute the intersection of slab intersection intervals.
        tmin = fmaxf(tmin, t1);
        tmax = fminf(tmax, t2);
        // Exit with no collision as soon as slab intersection becomes empty.
        if (tmin > tmax) {
            return false;
        }
    }

    // Z slab.
    if (fabsf(d.z) < EPSILON) {
        // Ray is parallel to slab. No hit if origin not within slab.
        if (p.z < a.min.z || p.z > a.max.z) {
            return false;
        }
    } else {
        // Compute intersection t value of ray with near and far plane of slab.
        float ood = 1.0f / d.z;
        float t1 = (a.min.z - p.z) * ood;
        float t2 = (a.max.z - p.z) * ood;
        // Make t1 be intersection with near plane, t2 with far plane.
        if (t1 > t2) {
            std::swap(t1, t2);
        }
        // Compute the intersection of slab intersection intervals.
        tmin = fmaxf(tmin, t1);
        tmax = fminf(tmax, t2);
        // Exit with no collision as soon as slab intersection becomes empty.
        if (tmin > tmax) {
            return false;
        }
    }

    // Ray intersects all 3 slabs.
    return true;
}
```

This test was taken from Christer Ericson’s 2005 book Real-Time Collision Detection.

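The idea behind the algorithm is to take each axis in turn and intersect the ray with it, essentially in one dimension. Each axis is treated as a ‘slab’: the region between the two planes of the AABB that are perpendicular to that axis. For each slab, we compute the range of ray parameters t over which the ray is inside it, and we narrow the running interval [tmin, tmax] to the overlap of those ranges. If the interval ever becomes empty (tmin > tmax), the ray misses the box. If it survives all three axes, some stretch of the ray lies inside all three slabs at once, which means the ray hits the box. Because tmin starts at 0, only hits in front of the viewer count.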
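Since the three slab blocks differ only in which component they read, the same test can be written as a loop over the axes. This is a sketch of that restructuring, using plain stand-in Vec3 and AABB types and an assumed tolerance of 1e-6 in place of the project’s EPSILON; it follows Ericson’s slab test, including using the direction component for the parallel check.

```cpp
#include <algorithm>
#include <cmath>
#include <limits>
#include <utility>

struct Vec3 { float x, y, z; };
struct AABB { Vec3 min, max; };

// Slab test as a loop over the three axes. p is the ray origin and d its
// direction (need not be normalized); only hits with t >= 0 count.
bool RayAABBIntersectLoop(Vec3 p, Vec3 d, const AABB& a) {
    const float origin[3] = {p.x, p.y, p.z};
    const float dir[3]    = {d.x, d.y, d.z};
    const float lo[3]     = {a.min.x, a.min.y, a.min.z};
    const float hi[3]     = {a.max.x, a.max.y, a.max.z};
    const float kEpsilon  = 1e-6f;  // assumed tolerance for "parallel"

    float tmin = 0.0f;
    float tmax = std::numeric_limits<float>::max();
    for (int i = 0; i < 3; ++i) {
        if (std::fabs(dir[i]) < kEpsilon) {
            // Parallel to this slab: only a hit if the origin is inside it.
            if (origin[i] < lo[i] || origin[i] > hi[i]) return false;
        } else {
            float ood = 1.0f / dir[i];
            float t1 = (lo[i] - origin[i]) * ood;
            float t2 = (hi[i] - origin[i]) * ood;
            if (t1 > t2) std::swap(t1, t2);  // t1 = near plane, t2 = far plane
            tmin = std::max(tmin, t1);
            tmax = std::min(tmax, t2);
            if (tmin > tmax) return false;   // slab intervals no longer overlap
        }
    }
    return true;
}
```

The test cases cover a ray straight into the box, a box behind the viewer (rejected because tmin starts at 0), a ray parallel to a slab whose origin lies outside it, and a ray looking down at the box at an angle.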

The result is that if you air tap while looking at the terrain (so that your gaze ray passes through its bounding box), the terrain will reset. If you tap while looking at anything else, the GUI manager gets the tap and toggles the surface mesh rendering instead.

The only concern I have now is whether I’ll need to change this code once I have found the horizontal planes and anchored the terrain to a surface. Currently, the terrain is aligned with the coordinate system of the Spatial Anchor. The Anchor is aligned with the world coordinate system when the terrain is initialized. In other words, everything is aligned with the direction you are looking in when the terrain is first spawned. We’ll need the terrain to be aligned with the surface it is anchored to, which means figuring out how to determine that alignment.
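One common way to keep the existing AABB test once the terrain is rotated is to move the gaze ray into the terrain’s own frame, where the terrain is still axis-aligned, and run the same test there. The sketch below assumes the terrain ends up rotated only about the vertical axis (plausible for a horizontal surface, but still an assumption), and WorldRayToTerrainLocal() is a hypothetical helper, not something the project implements. Its output would be passed to RayAABBIntersect() together with the terrain’s local-space box.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };
struct Ray { Vec3 origin, dir; };

// Rotates v about the vertical (y) axis by `angle` radians, using the
// right-handed convention where a positive angle turns +x toward -z.
Vec3 RotateY(Vec3 v, float angle) {
    float c = std::cos(angle), s = std::sin(angle);
    return {c * v.x + s * v.z, v.y, -s * v.x + c * v.z};
}

// Hypothetical helper: expresses a world-space ray in the frame of a terrain
// whose local origin is `terrainOrigin` and which is yawed by `yaw` radians.
Ray WorldRayToTerrainLocal(Ray world, Vec3 terrainOrigin, float yaw) {
    Vec3 rel = {world.origin.x - terrainOrigin.x,
                world.origin.y - terrainOrigin.y,
                world.origin.z - terrainOrigin.z};
    // Undo the terrain's rotation. The origin is translated first; the
    // direction is only rotated.
    return {RotateY(rel, -yaw), RotateY(world.dir, -yaw)};
}
```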

But first, I need to actually find the planes. I intend for that to be my next post. I may split off this alignment stuff into a second post on the subject. That will depend, I suppose, on just how difficult it turns out to be. If I basically get it for free, then one post will be enough.

For the latest version, see GitHub.

Traagen